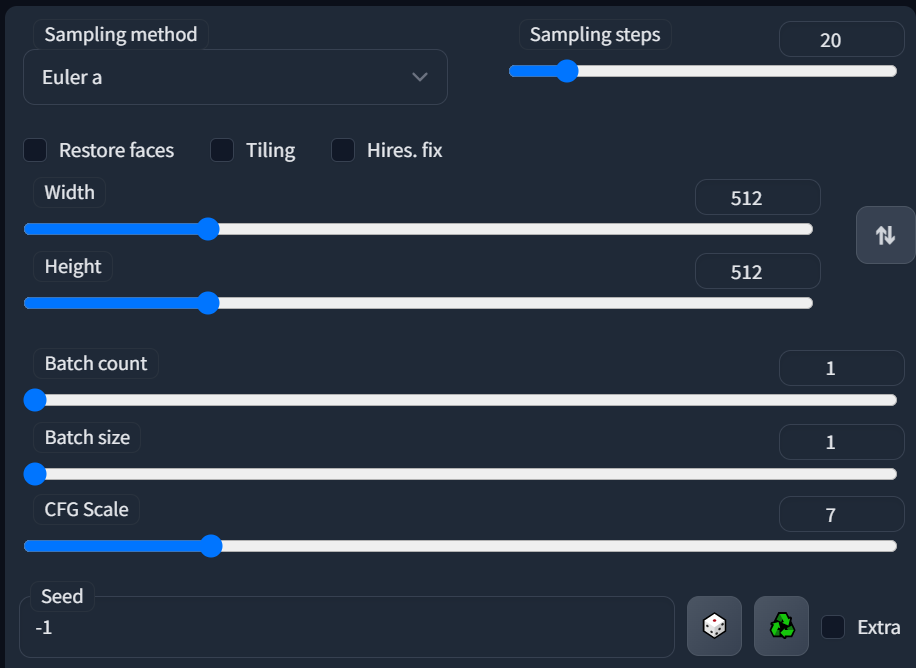

Samplers/Sampling Method

A sampler is the algorithm that determines how the image is calculated. Your choice of sampler influences the end result and can make your images wildly different. Some AI platforms call them schedulers, because schedulers/samplers use noise in different ways to progressively move towards a denoised image. Each sampler may work better with different settings such as steps, CFG, and prompt. In fact, with some samplers you may not see any difference between 30 steps and 70 steps! The sampler you pick may also increase the amount of time it takes to generate an image.

This tweet explains it concisely and shows how mysterious this technology actually is: https://twitter.com/iScienceLuvr/status/1564847717066559488

Euler A is known as the most "creative" sampler. Think of it as an idea generator, which is why many folks use Euler A as the first sampler in their creative process. It can try a large number of different prompt ideas across a broad set of interpretations, which is great when you are figuring out a concept.

DDIM is a faster sampler, often used for inpainting.

DPM comes in many different variants, and many of them can be slower! DPM++ 2M is known as one of the faster samplers of the group.

For more sampler nerdery, check out the Reddit post "Sampler / step count comparison with timing info" from r/StableDiffusion, which has side-by-side comparison images.

Sampling Steps

Steps take your creation from noise/static to a generated image. Depending on the sampler used, you may get different results. In general, the more steps you use, the higher the quality of the image, but the longer the generation will take. Larger step counts can create more detailed images. However, it is easy to overcompensate and use more steps than necessary. Some creators insist that high step counts are often not needed, depending on your prompt.
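To make the "noise to image" idea a bit more concrete, here is a minimal toy sketch of an Euler-style sampling loop in plain Python. It is not how any particular UI implements its samplers: the linear noise schedule, `sigma_max`, and the `toy_denoiser` (which simply "knows" the clean answer) are all assumptions made up for illustration. A real model has to guess the clean image at every step, which is where step count and sampler choice start to matter.

```python
def sigma_schedule(steps, sigma_max=10.0):
    """Hypothetical schedule: noise level falls linearly from sigma_max to 0."""
    return [sigma_max * (1 - i / steps) for i in range(steps + 1)]


def toy_denoiser(x, sigma, target):
    """Stand-in for the neural network: it always predicts the clean target."""
    return list(target)


def euler_sample(start, target, steps):
    """Walk from a noisy start towards the denoised result, one Euler step at a time."""
    x = list(start)
    sigmas = sigma_schedule(steps)
    errors = []
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        denoised = toy_denoiser(x, sigma, target)
        # Direction that points from the predicted clean image towards the noise.
        direction = [(xi - di) / sigma for xi, di in zip(x, denoised)]
        # Move along that direction until the next (lower) noise level.
        x = [xi + di * (sigma_next - sigma) for xi, di in zip(x, direction)]
        errors.append(max(abs(xi - ti) for xi, ti in zip(x, target)))
    return x, errors


target = [0.2, 0.8, 0.5]  # three made-up "pixels"
start = [t + 10.0 * n for t, n in zip(target, [0.3, -1.1, 0.7])]  # target plus heavy noise
final, errors = euler_sample(start, target, steps=20)
```

The `errors` list shrinks at every step as the noise level drops. Because the toy denoiser is perfect, the final result is the same for any step count here; with a real model the prediction changes from step to step, so different step counts and samplers can land on different images.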

Restore Faces

People have mixed success with this option. It can produce more generic faces, perhaps even airbrushed or bland ones. For better results, you may try upscaling and inpainting the face instead. This option works best with realistic faces, not anime or paintings.

Tiling

Used for creating repeating images, like a wallpaper.
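A quick way to check whether an image really tiles is to repeat it in a grid and look at the seams. The sketch below does that on a tiny made-up grid of pixel values in plain Python; the `tile` helper is hypothetical and only illustrates what "repeating" means, not how the Tiling option works internally.

```python
def tile(image, reps_y, reps_x):
    """Repeat a 2D grid of pixels reps_y times down and reps_x times across."""
    return [row * reps_x for _ in range(reps_y) for row in image]


image = [
    [1, 2],
    [3, 4],
]
wallpaper = tile(image, 2, 2)
```

In a tileable image, the right edge lines up with the left edge and the bottom edge with the top edge, so the repeated grid shows no visible seams.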

Hi-Res Fix

Hi-Res Fix helps you eliminate deformities and artifacts that result when generating at higher resolutions. It is very useful when working with different aspect ratios, and it makes a good in-between step before upscaling.

More info on Hi-Res settings can be found here: https://github.com/AUTOMATIC1111/stable-diffusion-webui/discussions/6509

Width/Height (Resolution)

For best results, start at the same resolution as the model and server you are working with: 512x512 for Stable Diffusion 1.5 and 768x768 for 2.1.

Depending on how the model you are using was trained, you may get good results with other aspect ratios. If you start getting duplicate faces, hands, etc., try the Hi-Res Fix.
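If you want to try another aspect ratio while staying close to the model's native size, one rule of thumb is to keep roughly the same pixel count and snap each side to a multiple of 8. The helper below is a hypothetical sketch of that arithmetic; both the "same pixel count" rule and the multiple of 8 are assumptions for illustration, not settings taken from any particular UI.

```python
import math


def dims_for_aspect(native_side, aspect_w, aspect_h, multiple=8):
    """Suggest (width, height) with about native_side**2 pixels at the given aspect ratio."""
    area = native_side * native_side
    width = math.sqrt(area * aspect_w / aspect_h)
    height = math.sqrt(area * aspect_h / aspect_w)

    def snap(value):
        # Round to the nearest allowed size, never below one multiple.
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


square = dims_for_aspect(512, 1, 1)
widescreen = dims_for_aspect(512, 16, 9)
```

For a 512x512 model this suggests 680x384 for a 16:9 image. Treat the output as a starting point and adjust based on how your model behaves.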

Batch Count

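The number of batches to run. Batches run one after another, while the images inside each batch are processed in parallel on the GPU, which means there may be similarities between the images in a batch. Putting more images into each batch can also speed up large generation runs.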

Batch Size

The number of images created in each batch. Note that, depending on the size of your server or GPU, larger batch sizes may run into VRAM issues.

For example, compare these two ways of generating 8 images:

- "Batch count = 8" with "batch size = 1", vs.

- "Batch count = 1" with "batch size = 8"

With the second setup, your generations could be up to 3x as fast.
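The arithmetic behind the two settings can be sketched in a few lines of Python. The `generation_plan` helper is hypothetical and just spells out the bookkeeping: the total number of images is batch count times batch size, and the batch count is how many runs happen one after another.

```python
def generation_plan(batch_count, batch_size):
    """Summarise how many images are made and how many sequential runs that takes."""
    return {
        "total_images": batch_count * batch_size,
        "sequential_runs": batch_count,
        "images_in_parallel": batch_size,
    }


one_at_a_time = generation_plan(batch_count=8, batch_size=1)
all_at_once = generation_plan(batch_count=1, batch_size=8)
```

Both plans produce 8 images, but the second one does it in a single run, at the cost of needing enough VRAM to hold all 8 images at once.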

CFG Scale

CFG, or "Classifier-Free Guidance", is one of the main parameters in Stable Diffusion. One way to think about it is that CFG scale is "how strict" the diffusion will be in following the prompt. The lower the CFG, the more freedom the diffuser model will have to create the image. The higher the CFG, the harder it will try to match your prompt. Note that what you think your prompt means may be wildly different from what the diffuser model thinks it means! Cranking up the CFG in that situation would make things "worse". In particular, prompts about things like color dynamics or lighting could make your image a bit "deepfried" as the diffuser overcompensates at high CFG.
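Under the hood, classifier-free guidance is usually described as mixing two predictions: one made without the prompt and one made with it. The sketch below shows that standard formula on plain Python lists; the function name and the tiny example values are made up for illustration, and a real implementation works on large tensors instead.

```python
def apply_cfg(uncond, cond, cfg_scale):
    """Push the prediction away from 'no prompt' and towards 'with prompt'."""
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0]  # made-up prediction without the prompt
cond = [1.0, 3.0]    # made-up prediction with the prompt

loose = apply_cfg(uncond, cond, cfg_scale=1.0)
strict = apply_cfg(uncond, cond, cfg_scale=7.5)
```

At a scale of 1 the result is just the prompted prediction. Higher scales exaggerate the difference between the two predictions, which is one way to picture why very high CFG values can push colors and contrast too far.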

Seed

The seed determines the initial noise that will be used to create your image. When the seed is set to -1, a random seed is generated. If you want to recreate an image, use the same seed. You can find the seed of an old image with the PNG Info tab. Note that changes in resolution or settings may produce wildly different images from the same seed, so keep everything as close as possible to the original when recreating an image.
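The link between seed and starting noise can be illustrated with Python's standard random number generator. This is only a sketch: `resolve_seed` and `initial_noise` are hypothetical helpers, and real tools generate the noise as a large tensor with their own generator, so the actual numbers will differ.

```python
import random


def resolve_seed(seed):
    """Follow the -1 convention: pick a random seed, otherwise keep the one given."""
    if seed == -1:
        return random.randrange(2**32)
    return seed


def initial_noise(seed, count):
    """Generate `count` Gaussian noise values that depend only on the seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(count)]


seed = resolve_seed(-1)           # a fresh random seed
first = initial_noise(seed, 4)
again = initial_noise(seed, 4)    # same seed, same noise
other = initial_noise(seed + 1, 4)
```

Running with the same seed reproduces the same starting noise, while even a neighbouring seed gives completely different noise.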

Checking the "Extra" box next to the seed reveals additional seed variation options, including the ability to morph between seeds.
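One common way to morph between two noise patterns is spherical linear interpolation (slerp), which blends them while keeping the overall noise strength roughly constant. The sketch below is an illustration in plain Python; whether your UI morphs seeds exactly this way is an assumption, and the function name and example vectors are made up.

```python
import math


def slerp(t, a, b):
    """Blend from noise `a` (t=0) to noise `b` (t=1) along an arc."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cos_omega = max(-1.0, min(1.0, dot / norms))
    if abs(cos_omega) > 0.9995:
        # Nearly parallel vectors: plain linear blending is numerically safer.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


noise_a = [1.0, 0.0]  # stand-in for the noise from one seed
noise_b = [0.0, 1.0]  # stand-in for the noise from another seed
halfway = slerp(0.5, noise_a, noise_b)
```

A small `t` keeps the result close to the first seed's noise, which is why low variation strengths tend to give subtle changes and higher values drift towards a different image.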