Wan2.2 Animate is a video generation model, usable in ComfyUI, that creates smooth, frame-by-frame video sequences guided by an input video. It takes the motion and structure of an existing clip and generates a new version with a different subject or style.

RunDiffusion now hosts a workflow built around the Wan2.2 Animate model, making it possible to run this process entirely in the cloud without any local setup.

With this workflow you can:

- Upload a short input video (provides motion and pose)

- Upload a reference image (the character or subject you want to swap in)

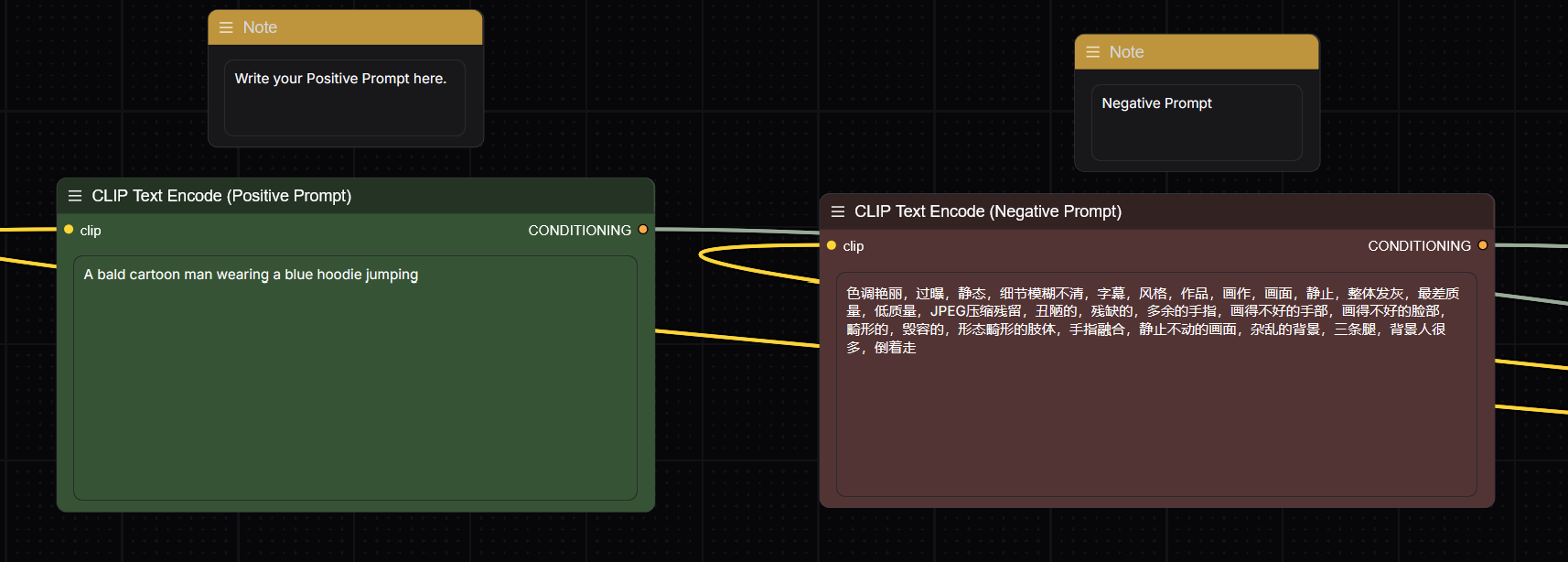

- Enter a prompt describing style, appearance, and scene

The output is a new video, generated by the Wan2.2 Animate model, in which the subject of the original video is replaced according to your reference image and prompt.

How to Use the Wan2.2 Animate Model on RunDiffusion

You can launch and run the Wan2.2 Animate model inside ComfyUI on RunDiffusion:

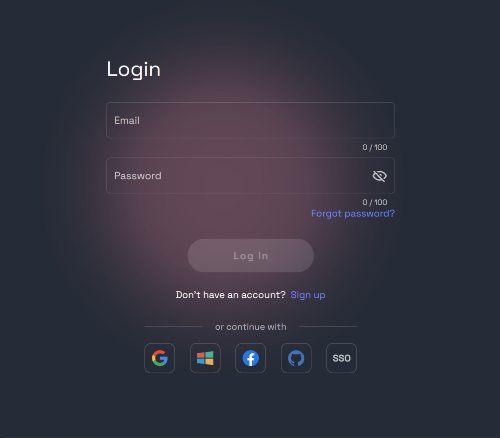

Go to https://app.rundiffusion.com/login and log in.

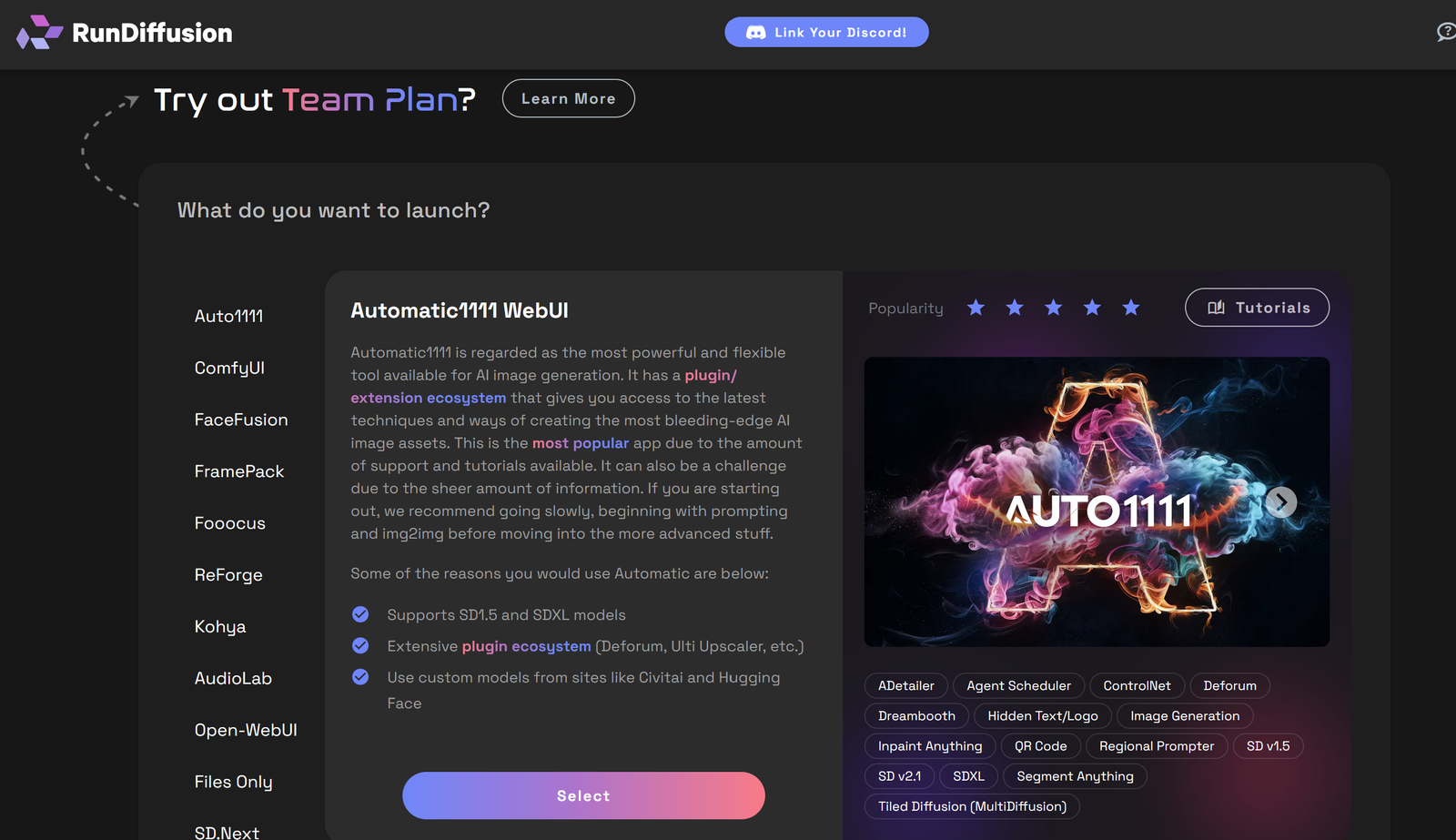

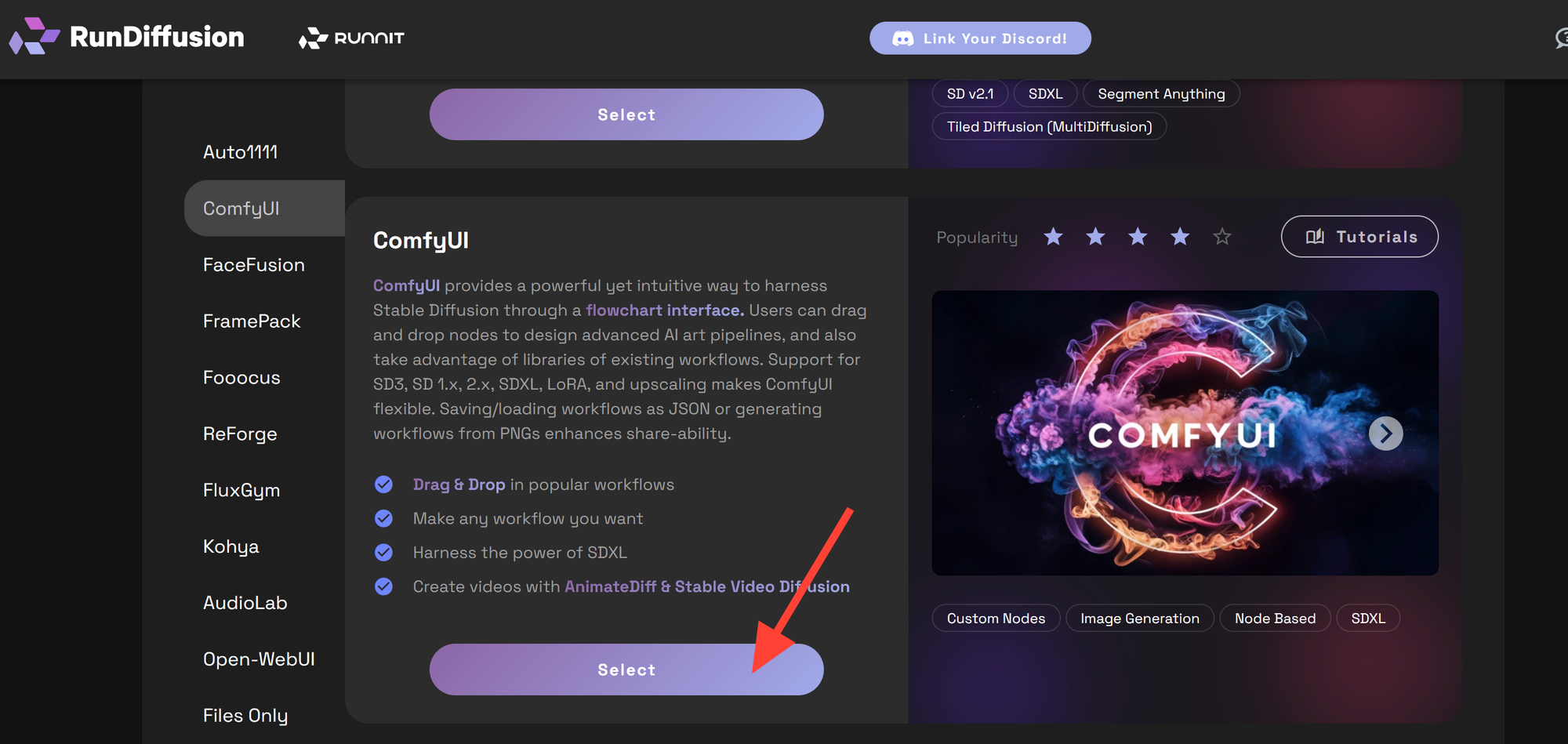

Navigate to Open Source Apps.

In the left sidebar, select the ComfyUI app.

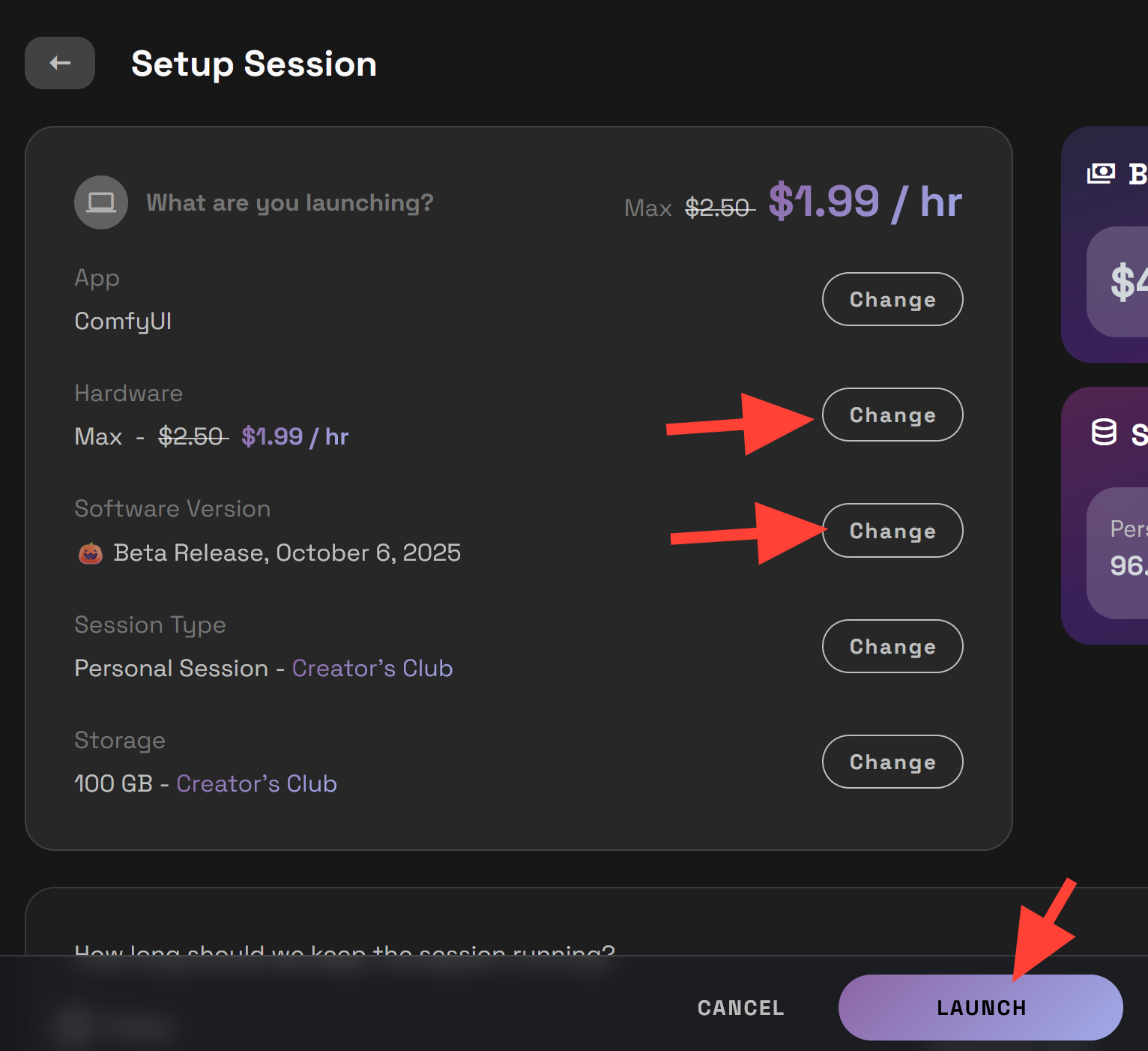

Select the Beta Build and Max server and click Launch.

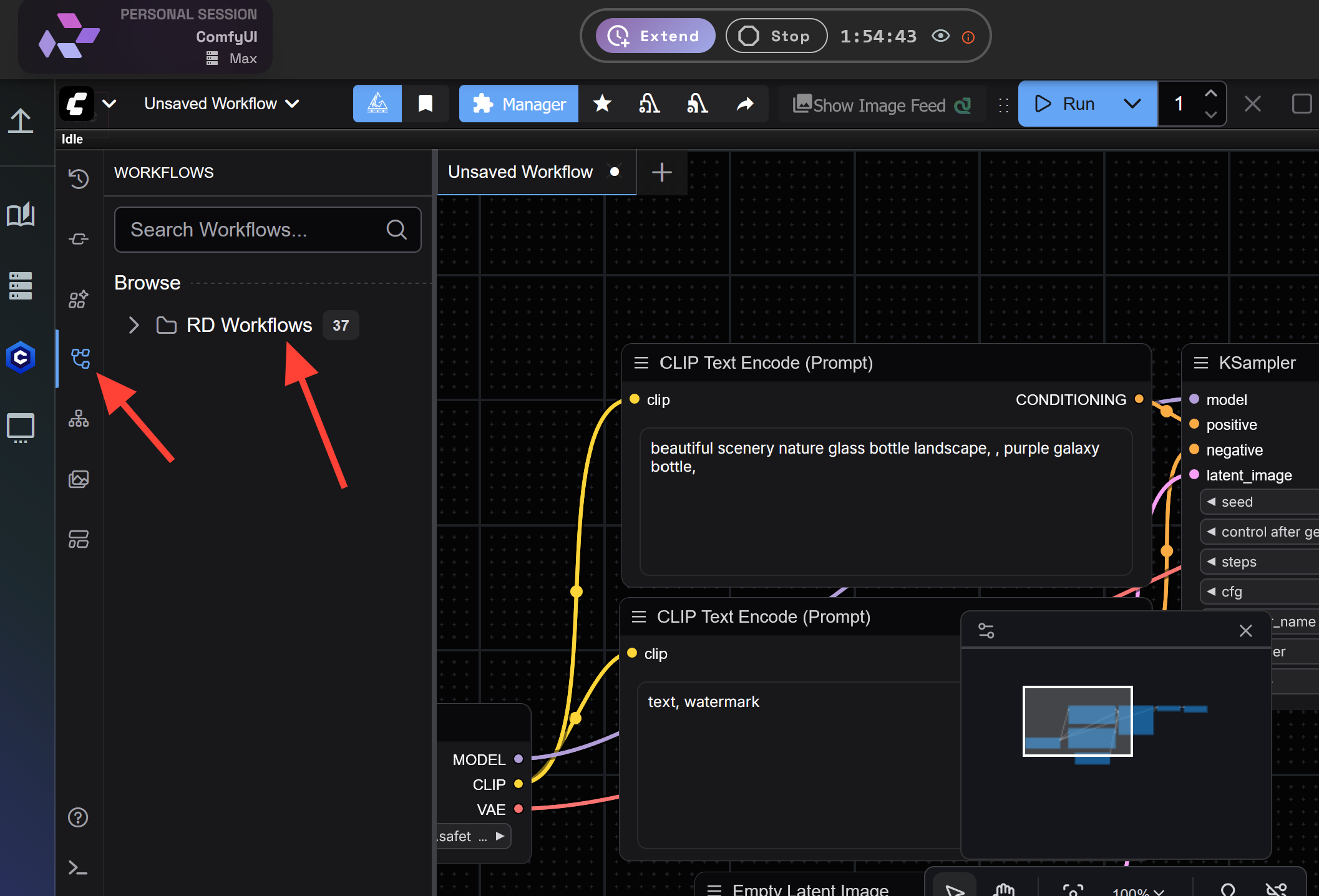

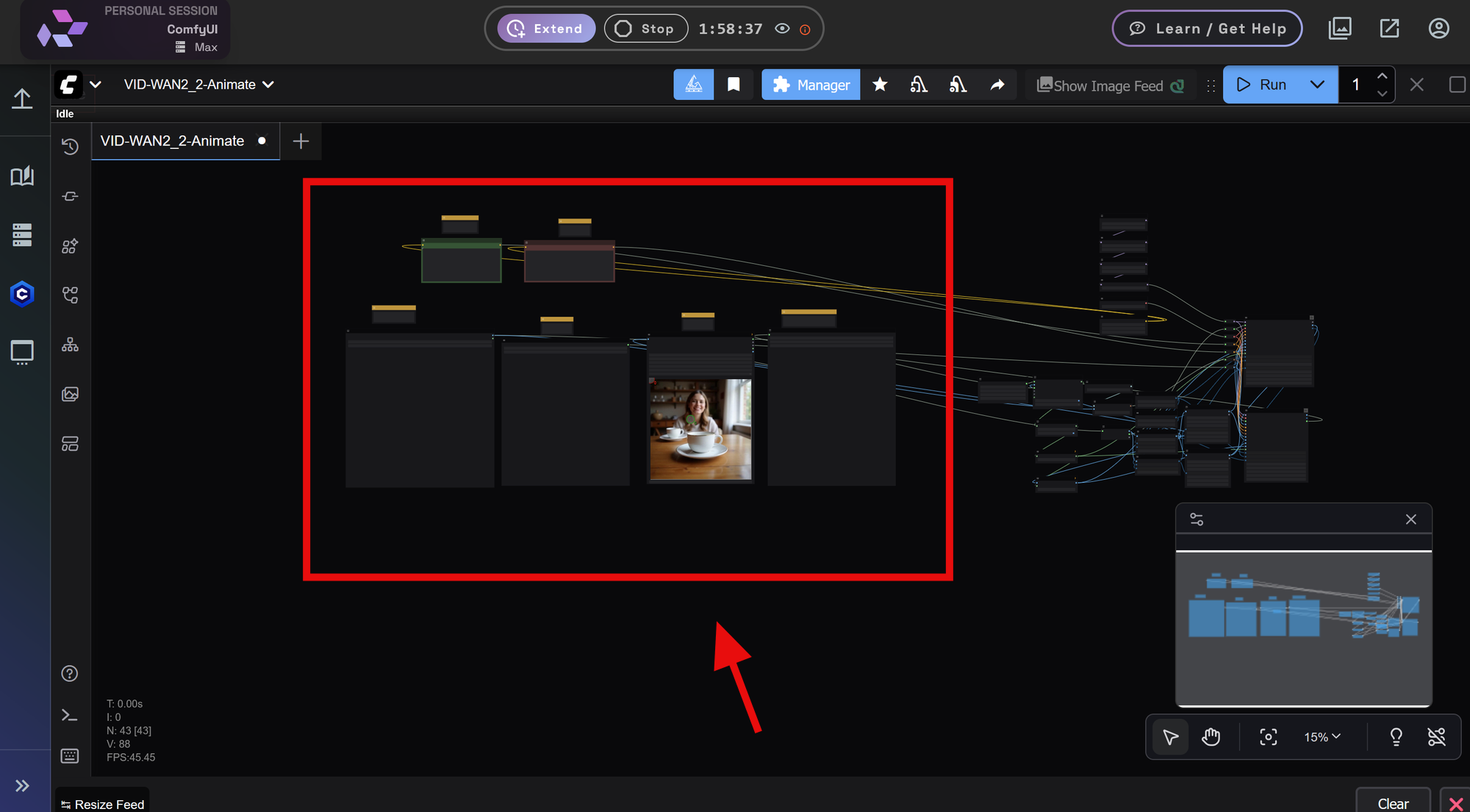

Click on the Workflow tab on the left side and click on the RD Workflows folder.

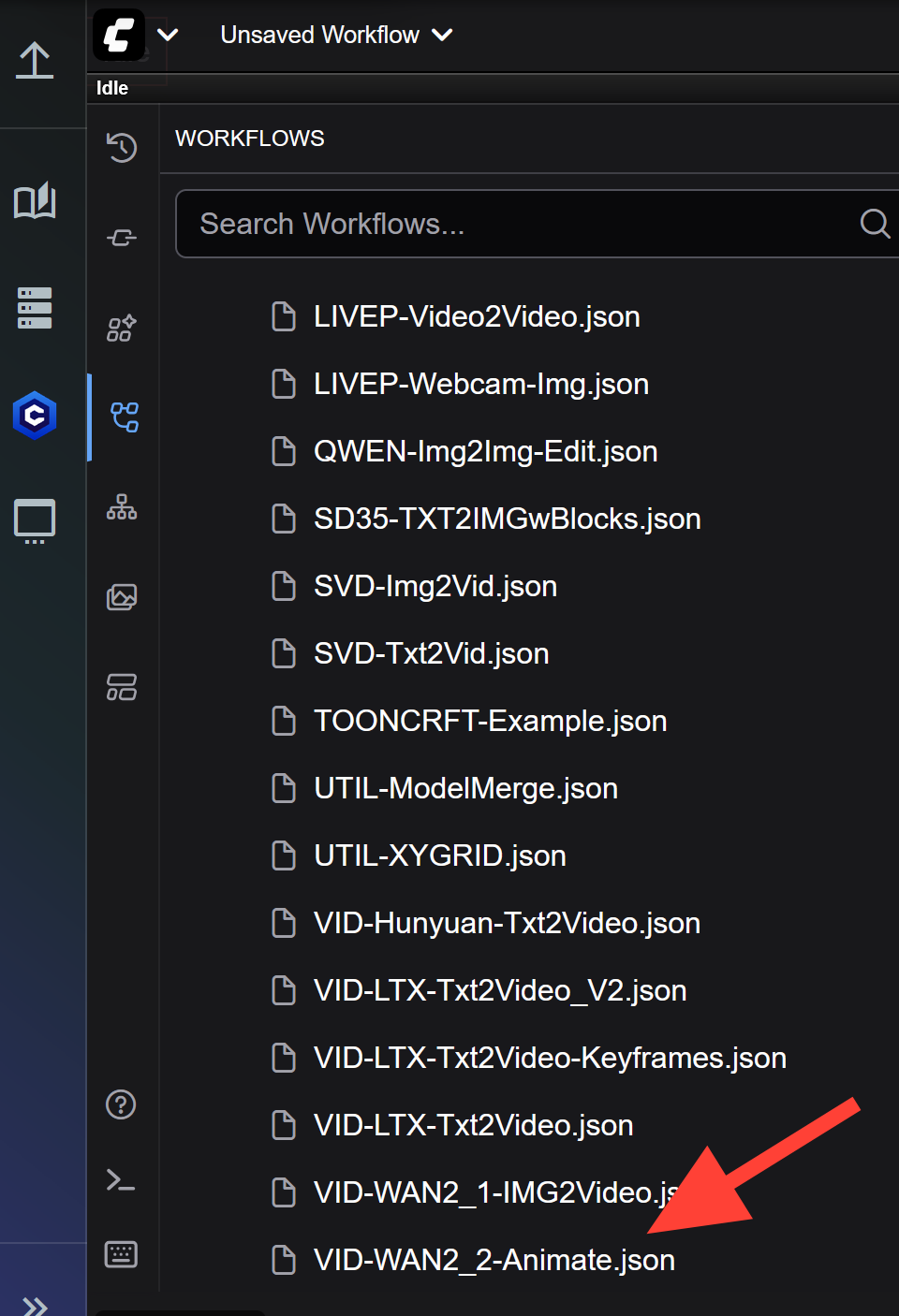

Scroll down to the VID-WAN-Animate Workflow and click on it.

The workflow is split into two parts. The left side contains the input fields, with notes explaining what to do with each one. You can ignore the right side for now; you do not need it to run the workflow.

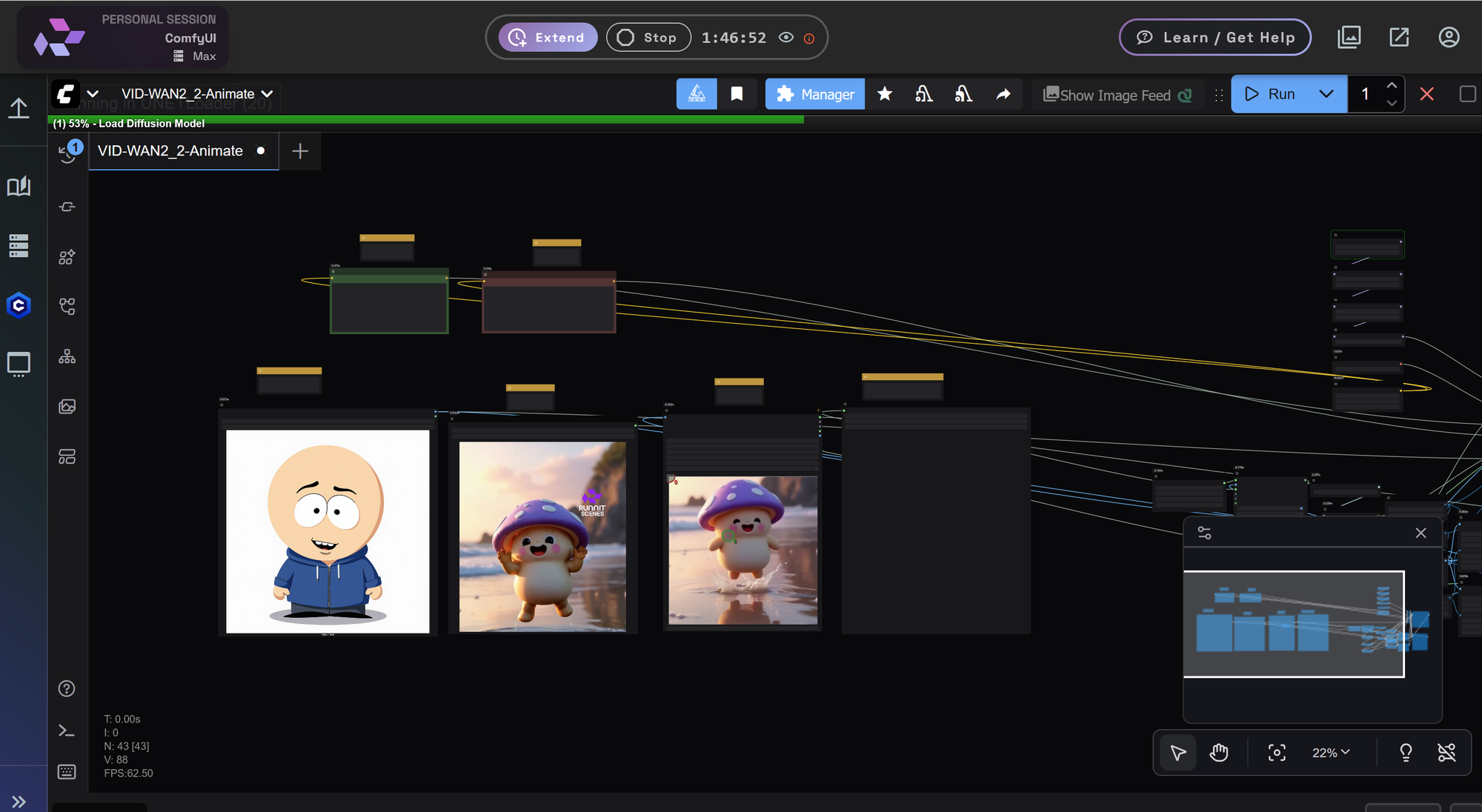

Upload an image with the Load Image node. This is the character you want to swap into the video.

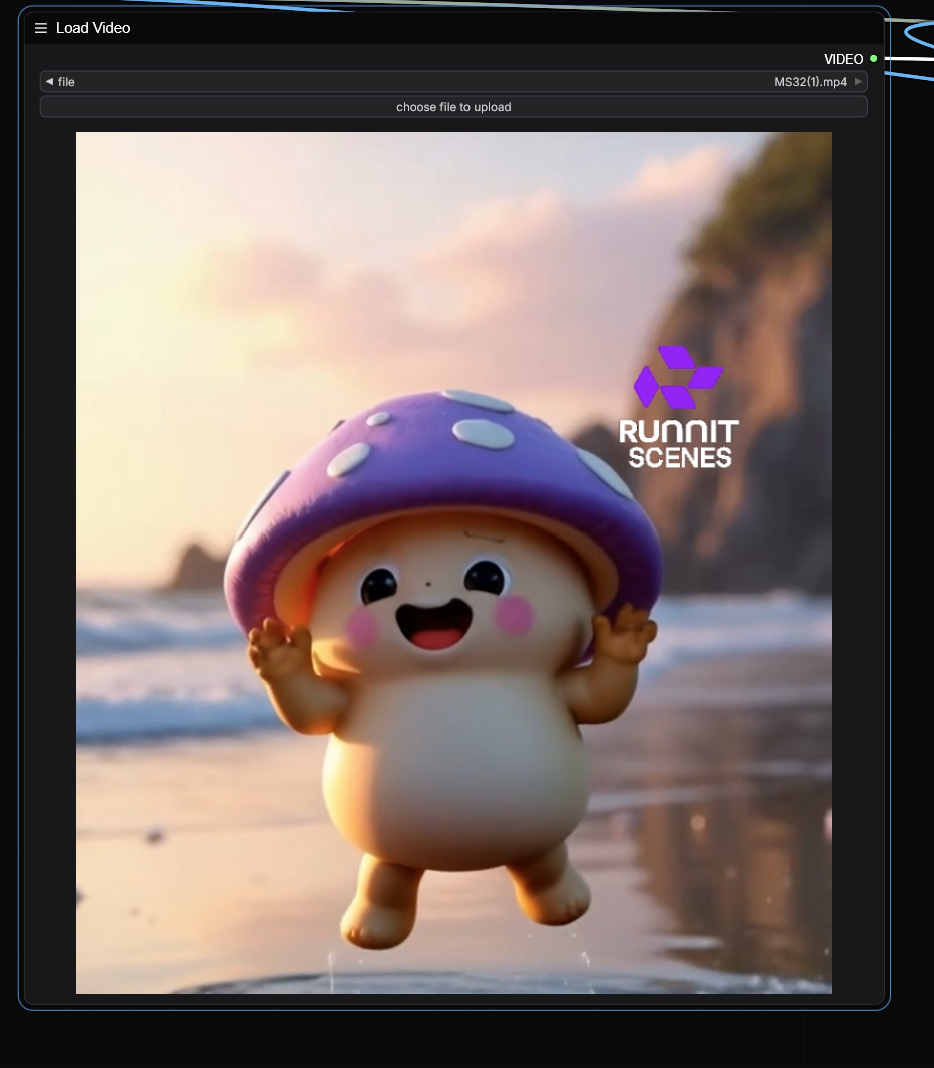

Upload your input video with the Load Video node. In this example the video resolution is set to 640x640, so the workflow automatically trims the video to fit.
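To get a feel for what trimming to 640x640 means for a clip that is not square, here is a minimal sketch of one common approach: a centered crop to the target aspect ratio. This is an assumption for illustration only; the workflow's actual resizing behavior is not documented here, and `center_crop_box` is a hypothetical helper, not part of ComfyUI or RunDiffusion.

```python
def center_crop_box(width, height, target_w=640, target_h=640):
    """Return (left, top, right, bottom) for the largest centered region
    of a width x height frame that matches the target aspect ratio.

    Assumption: the trim is a centered crop. The real workflow may
    resize or crop differently.
    """
    target_ratio = target_w / target_h
    if width / height > target_ratio:
        # Frame is wider than the target: keep full height, trim the sides.
        crop_h = height
        crop_w = round(height * target_ratio)
    else:
        # Frame is taller than (or matches) the target: keep full width,
        # trim the top and bottom.
        crop_w = width
        crop_h = round(width / target_ratio)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


# A 1920x1080 landscape clip keeps its central 1080x1080 square.
print(center_crop_box(1920, 1080))  # (420, 0, 1500, 1080)
```

If the subject of your clip sits near the left or right edge, it may be worth cropping the video to a square yourself before uploading, so nothing important is lost.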

Enter a prompt. You can ignore the negative prompt for now.

Click Run. Advanced users may adjust the masking or resolution settings.

Our Finished video.

What This Model Can Be Used For

The Wan2.2 Animate model is especially useful for:

- Replacing a person or character in a video with a new one defined by a reference image

- Creating stylized versions of real video clips

- Experimenting with video-based AI generation inside ComfyUI

- Producing short clips with consistent motion but different characters or looks

Considerations

- Works best with shorter clips (under 10 seconds)

- Reference images with clear details and good lighting produce better results

- This workflow generates new frames based on your input video; it does not simply edit the original video
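The clip-length guideline above can be checked before uploading. The sketch below assumes you already know the clip's frame count and frame rate (for example, from your video player's file properties); the function names are hypothetical, and the 10-second threshold is simply the guideline from the list above.

```python
MAX_SECONDS = 10.0  # guideline from the considerations above


def clip_duration_seconds(frame_count, fps):
    """Duration of a clip in seconds, given its frame count and frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frame_count / fps


def is_short_enough(frame_count, fps, max_seconds=MAX_SECONDS):
    """True if the clip is shorter than the recommended maximum length."""
    return clip_duration_seconds(frame_count, fps) < max_seconds


print(is_short_enough(240, 30))  # 240 frames at 30 fps is 8 s: True
print(is_short_enough(450, 30))  # 450 frames at 30 fps is 15 s: False
```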