For these features, Creator's Club is required. The Creator's Club allows you to upload all kinds of interesting custom models and other trained objects onto private storage for your use.

Below we have an overview of the basic types of trained objects you can use in Automatic1111. Instructions are included for installation and usage.

What are these different models!?

Well-Researched Comparison of Training Techniques (Lora, Inversion, Dreambooth, Hypernetworks) from StableDiffusion

Embeddings / Textual Inversions

Textual Inversion is a technique for capturing novel concepts from a small number of example images in a way that can later be used to control text-to-image pipelines. It does so by learning new ‘words’ in the embedding space of the pipeline’s text encoder. These special words can then be used within text prompts to achieve very fine-grained control of the resulting images.

https://huggingface.co/docs/diffusers/training/text_inversion

Directory for loading: log in as sduser and put the files in the 'embeddings' folder in the base directory. These files persist between sessions and load on boot.

For training Embeddings/Textual Inversions, please see this great Reddit post:

Detailed guide on training embeddings on a person's likeness, by u/Zyin in r/StableDiffusion

Hypernetworks

In computer science, a hypernetwork is a network that generates the weights for another network, called the main network. The main network behaves like any other neural network: it learns to map raw inputs to their desired targets. The hypernetwork, in turn, takes a set of inputs that contain information about the structure of the weights and generates the weights for that layer.

https://metanews.com/what-are-hypernetworks/

Directory for loading: log in as webui, open the 'models' folder in the base directory, and put the files in its 'hypernetworks' folder. Note that these files are deleted when you shut down your session.

LoRA

LoRA: Low-Rank Adaptation of Large Language Models is a novel technique introduced by Microsoft researchers to deal with the problem of fine-tuning large-language models. Powerful models with billions of parameters, such as GPT-3, are prohibitively expensive to fine-tune in order to adapt them to particular tasks or domains. LoRA proposes to freeze pre-trained model weights and inject trainable layers (rank-decomposition matrices) in each transformer block. This greatly reduces the number of trainable parameters and GPU memory requirements since gradients don't need to be computed for most model weights. The researchers found that by focusing on the Transformer attention blocks of large-language models, fine-tuning quality with LoRA was on par with full model fine-tuning while being much faster and requiring less compute.

https://huggingface.co/blog/lora
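To make the "greatly reduces the number of trainable parameters" claim concrete, here is a minimal arithmetic sketch. It assumes the usual LoRA formulation, in which the update to a frozen d x k weight matrix is the product of two small matrices of shapes d x r and r x k. The layer size and rank below are hypothetical values chosen only for illustration; they are not taken from any particular model.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r update: B (d x r) times A (r x k)."""
    return r * (d + k)


# Hypothetical layer size and rank, for illustration only.
d, k, r = 768, 768, 4

full = full_finetune_params(d, k)
lora = lora_params(d, k, r)

print(f"full fine-tuning: {full} trainable parameters")
print(f"LoRA (rank {r}):  {lora} trainable parameters")
print(f"LoRA trains {lora / full:.2%} of the parameters for this layer")
```

The smaller the rank r relative to the layer dimensions, the larger the saving, which is also why LoRA files tend to be much smaller than full model checkpoints.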

Directory for loading: log in as webui, open the 'models' folder in the base directory, and put the files in its 'Lora' folder (note the capital L). Note that these files are wiped when the server session is shut down.
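The three upload locations described above can be summarised in a small lookup sketch. This is only an illustration: the helper name `target_path` and the example file name are hypothetical, and the paths assume that the 'hypernetworks' and 'Lora' folders sit inside 'models', which in turn sits in the base directory.

```python
from pathlib import PurePosixPath

# Upload folder per object type, relative to the base directory.
# Assumption: 'hypernetworks' and 'Lora' live inside the 'models' folder.
TARGET_DIRS = {
    "embedding": PurePosixPath("embeddings"),
    "hypernetwork": PurePosixPath("models/hypernetworks"),
    "lora": PurePosixPath("models/Lora"),  # note the capital L
}

# Per the notes above, only embeddings persist between sessions.
PERSISTS_BETWEEN_SESSIONS = {"embedding"}


def target_path(kind: str, filename: str) -> PurePosixPath:
    """Return the relative path a file of the given kind should be uploaded to."""
    return TARGET_DIRS[kind] / filename


# Hypothetical file name, for illustration only.
print(target_path("lora", "myStyle.safetensors"))
print("persists:", "lora" in PERSISTS_BETWEEN_SESSIONS)
```

If an object does not show up after uploading, checking its location against a table like this is a reasonable first step.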

Using Custom Objects in Automatic1111

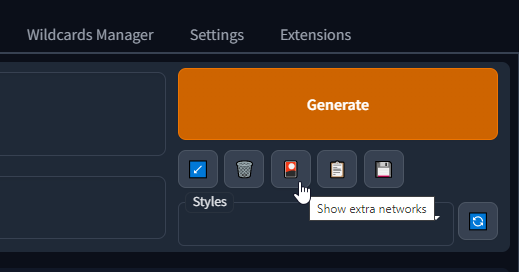

To see what you have installed, click the "Show Extra Networks" button underneath the Generate button in Automatic1111's Txt2Img tab.

Here you will see all the custom objects you have installed, separated by tab. Switch between tabs to see the objects of each type that are ready for use. To use one, simply click on it and it will be inserted into your prompt. If you upload an object while this panel is open, hit refresh and it should appear, ready to use. This is a great way to confirm you used the right directory.

Weighting

When you load a Hypernetwork or LoRA, you will notice that it is inserted into your prompt inside angle brackets, with a number attached. Like this:

<hypernet:discoElysiumStyle_discoElysium:1>

This states that we are to use a hypernet, with the name Disco Elysium Style, at a weight of 1.

The weighting determines the relative strength of the style. If you go below 1, to 0.8 for example, you reduce the strength of the hypernetwork. You could think of this as 80% strength, but that is unlikely to be exact given the complexity of Automatic1111's prompting engine. Sometimes it's more alchemy than science!
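The three parts of such a tag (type, name, weight) can be pulled apart with a short sketch like the one below. This is not Automatic1111's own parser, only an illustration of the tag layout shown above; the function name `parse_extra_network_tags` and the example prompt text are hypothetical.

```python
import re
from typing import List, Tuple

# Matches tags such as <hypernet:name:1> or <lora:name:0.8>.
TAG_PATTERN = re.compile(r"<(hypernet|lora):([^:<>]+):([0-9]*\.?[0-9]+)>")


def parse_extra_network_tags(prompt: str) -> List[Tuple[str, str, float]]:
    """Return (type, name, weight) for every hypernet or lora tag in the prompt."""
    return [
        (kind, name, float(weight))
        for kind, name, weight in TAG_PATTERN.findall(prompt)
    ]


# Hypothetical prompt using the tag from above at a reduced weight.
prompt = "a portrait of a detective <hypernet:discoElysiumStyle_discoElysium:0.8>"
tags = parse_extra_network_tags(prompt)

for kind, name, weight in tags:
    print(f"{kind}: {name} at weight {weight}")
```

To change the strength of an object, edit the number after the last colon directly in the prompt box.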

Mixing and Matching

Feel free to combine multiple embeddings, LoRAs, and hypernetworks to find what works and what doesn't. Share your results on the Discord! The best way to get interesting results is to experiment, experiment, experiment.